Technical Approach

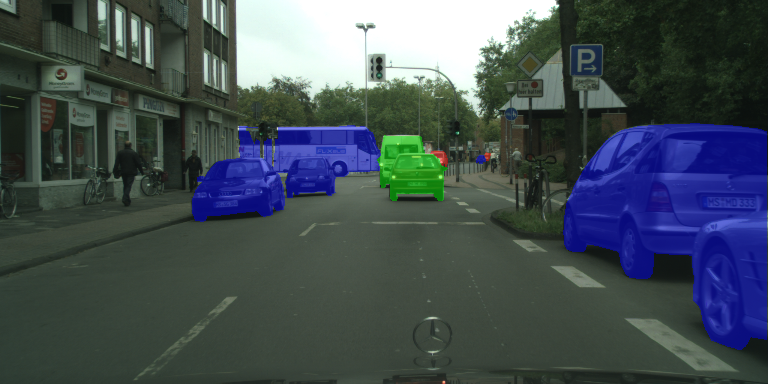

In this work, we propose a composite deep convolutional neural network architecture that learns to predict both the semantic category and motion status of each pixel from a pair of consecutive monocular images. The composition of our SMSnet architecture can be deconstructed into three components: a section that learns motion features from generated optical flow maps, a parallel section that generates features for semantic segmentation, and a fusion section that combines both the motion and semantic features and further learns deep representations for pixel-wise semantic motion segmentation.

Please find the detailed description of the architecture in our IROS 2017 paper.